DeepSeek’s AI Uses 10x Less Power: A Revolution or Just Hype?

Last month, DeepSeek ignited a global conversation by claiming that its AI model uses roughly one-tenth the computing power of Meta’s Llama 3.1. The claim challenges the prevailing assumption that building cutting-edge AI demands enormous amounts of energy and other resources. If it holds up, the efficiency gains would matter a great deal, especially as the tech industry grapples with the growing environmental footprint of its rapidly expanding AI infrastructure.

At first glance, the environmental benefits look transformative. Tech giants are racing to build enormous data centers, some with electricity demands comparable to those of small towns, and concerns about AI’s contribution to climate change, from increased pollution to mounting pressure on power grids, have become pressing. Any reduction in the energy needed to train and run generative AI models is a meaningful step toward more sustainable technology, and DeepSeek’s apparent ability to cut those requirements dramatically points toward a greener path for AI.

DeepSeek has been gaining traction since it launched its V3 model in early December. According to the company’s technical report, the final training run for V3 cost $5.6 million and consumed about 2.78 million GPU hours on Nvidia’s H800 chips. By contrast, Meta’s Llama 3.1 405B model reportedly required about 30.8 million GPU hours on Nvidia’s more capable H100 chips. Estimates for training comparable models from other major developers range from $60 million to $1 billion. Even though DeepSeek’s figure covers only the final training run, the gap suggests a striking cost advantage.
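The headline “one-tenth” follows from the reported GPU-hour figures. The minimal Python sketch below is a back-of-the-envelope check using only the numbers quoted above; the implied per-GPU-hour rate is our own derived figure, not one stated in the reporting, and GPU hours on different chips are only a rough proxy for energy use.

```python
# Figures as reported in the article.
DEEPSEEK_V3_GPU_HOURS = 2.78e6     # final training run, Nvidia H800
LLAMA_31_405B_GPU_HOURS = 30.8e6   # reported, Nvidia H100
DEEPSEEK_V3_COST_USD = 5.6e6       # final training run only


def ratio(a: float, b: float) -> float:
    """Return how many times larger a is than b."""
    return a / b


# How many more GPU hours Llama 3.1 405B reportedly needed.
gpu_hour_ratio = ratio(LLAMA_31_405B_GPU_HOURS, DEEPSEEK_V3_GPU_HOURS)

# Derived (not reported) cost per GPU hour for DeepSeek's final run.
implied_rate = DEEPSEEK_V3_COST_USD / DEEPSEEK_V3_GPU_HOURS

print(f"Llama 3.1 405B used {gpu_hour_ratio:.1f}x the GPU hours")  # 11.1x
print(f"Implied cost: ${implied_rate:.2f} per GPU hour")           # $2.01
```

A ratio of about 11 is where “roughly one-tenth” comes from; it compares hours on two different chips, so it says nothing directly about kilowatt-hours.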

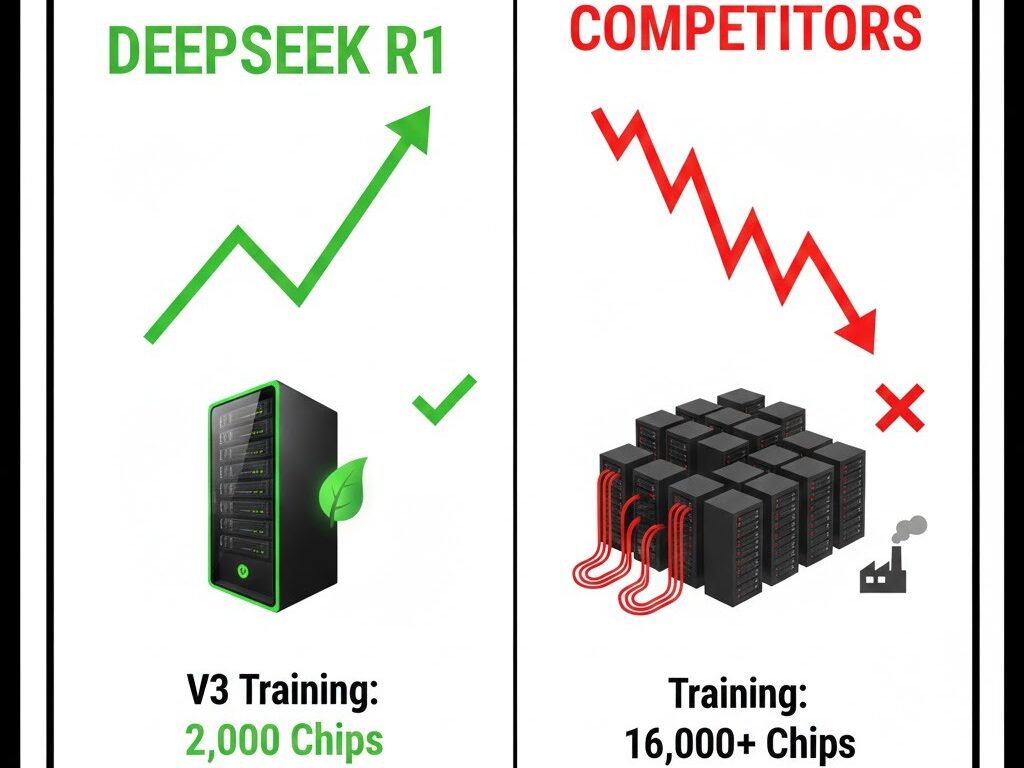

Momentum built further last week with the release of DeepSeek’s R1 model, which venture capitalist Marc Andreessen called “a profound gift to the world.” Within days of DeepSeek topping both Apple’s and Google’s app stores, the threat of a cheaper, more energy-efficient AI alternative was weighing on competitors’ stock prices. Nvidia’s shares fell sharply after reports that DeepSeek’s V3 model had been trained on only 2,000 chips, compared with the 16,000 or more that rivals typically use for models of similar scale.

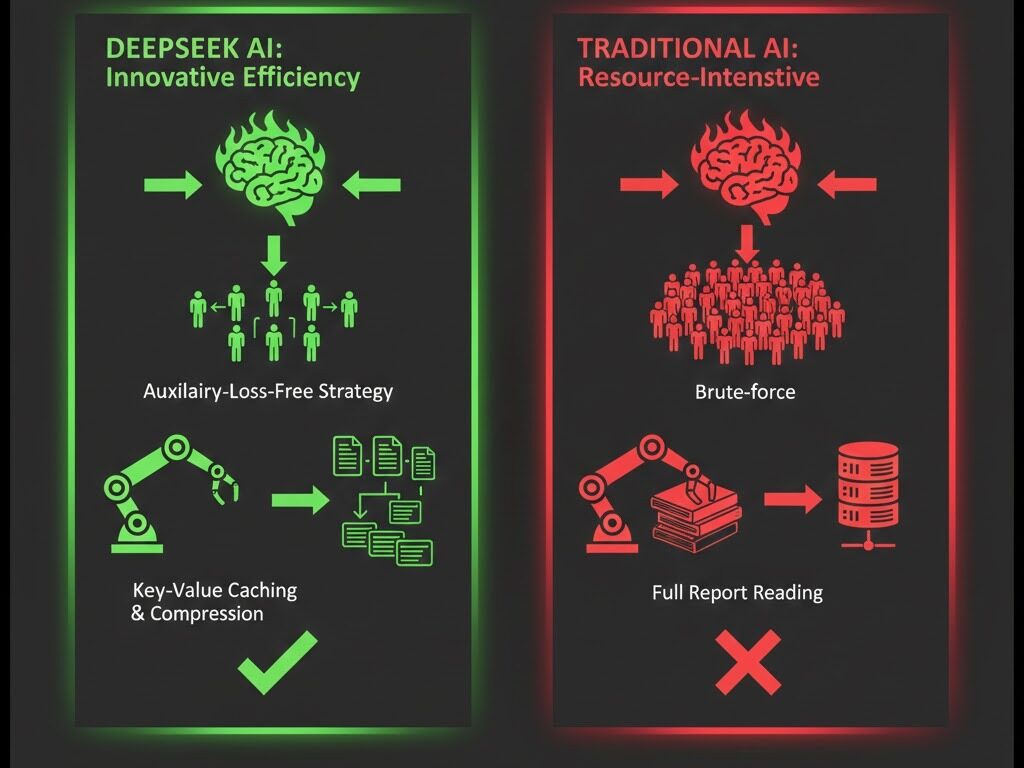

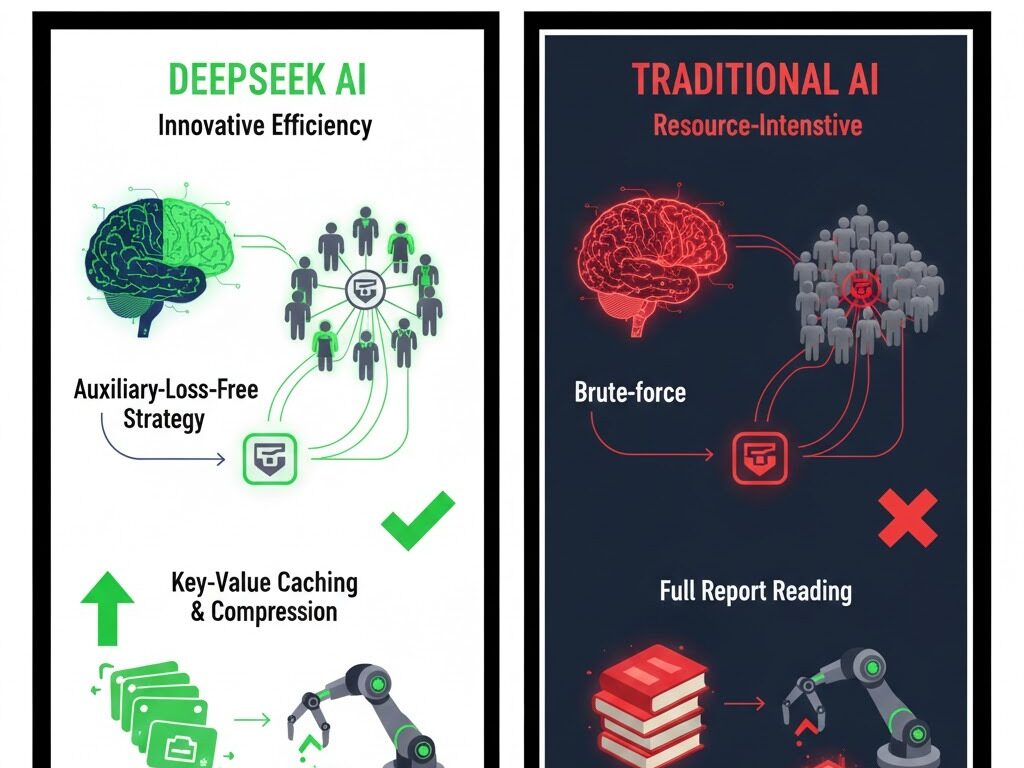

DeepSeek attributes its efficiency gains to a set of new training methods. The company says it significantly reduced energy consumption with what it calls an “auxiliary-loss-free strategy,” which governs how the model chooses which of its parts to train at any given moment. It works much like a customer service team routing each query to the right specialist instead of involving the whole team. Further savings come at inference time from key-value caching and compression, which let the model consult something like summarized index cards instead of rereading full reports, making each response faster and less resource-intensive.
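The “right specialist” analogy corresponds to mixture-of-experts routing: a router sends each token to only a few experts, so only those experts run (and, during training, get updated) for that token. As we read DeepSeek’s V3 technical report, the auxiliary-loss-free strategy keeps that routing balanced by giving each expert a bias that affects only which experts are selected, nudging it down for overloaded experts and up for underloaded ones after each step, instead of adding an extra loss term. The Python below is a minimal sketch under that reading; the function names, scores, `top_k`, and step size `gamma` are illustrative assumptions, not DeepSeek’s code.

```python
from typing import List, Tuple


def route(scores: List[float], bias: List[float], top_k: int) -> List[int]:
    """Pick the top_k experts for one token using bias-adjusted scores.

    The bias only changes *which* experts are chosen. In the V3 report's
    description the gating weights still come from the raw scores; this
    sketch models selection only.
    """
    adjusted = [s + b for s, b in zip(scores, bias)]
    ranked = sorted(range(len(scores)), key=lambda i: adjusted[i], reverse=True)
    return ranked[:top_k]


def update_bias(bias: List[float], load: List[int], gamma: float) -> List[float]:
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    return [
        b - gamma if n > mean_load else b + gamma if n < mean_load else b
        for b, n in zip(bias, load)
    ]


def run_step(
    batch: List[List[float]], bias: List[float], top_k: int, gamma: float
) -> Tuple[List[int], List[float]]:
    """Route a batch of tokens, count per-expert load, then adjust the bias."""
    load = [0] * len(bias)
    for scores in batch:
        for expert in route(scores, bias, top_k):
            load[expert] += 1
    return load, update_bias(bias, load, gamma)


if __name__ == "__main__":
    # Hypothetical affinity scores: 4 tokens x 4 experts, all favouring expert 0.
    batch = [
        [0.9, 0.5, 0.2, 0.1],
        [0.8, 0.6, 0.3, 0.2],
        [0.9, 0.4, 0.3, 0.1],
        [0.7, 0.5, 0.4, 0.3],
    ]
    bias = [0.0, 0.0, 0.0, 0.0]
    for step in range(5):
        load, bias = run_step(batch, bias, top_k=1, gamma=0.1)
        # Show how per-expert load shifts as the biases adapt.
        print(f"step {step}: load={load}")
```

Only the selected experts do any work for a given token, which is where the compute saving comes from; the bias update simply keeps any one expert from becoming a bottleneck.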
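The “index cards” idea can be made concrete by counting work. When a transformer generates text one token at a time, each step needs the keys and values of every earlier token; without a cache they are recomputed at every step, while a key-value cache computes each token’s pair once and reuses it. The toy Python sketch below counts those projections under that simplified model. `ProjectionCounter.project` is a hypothetical stand-in for a real attention layer’s key/value projections, and the sketch does not model DeepSeek’s compression of the cache.

```python
from typing import List, Tuple


class ProjectionCounter:
    """Counts how many key/value projections were computed."""

    def __init__(self) -> None:
        self.calls = 0

    def project(self, token: str) -> Tuple[str, str]:
        # Hypothetical stand-in for computing one token's key and value.
        self.calls += 1
        return (f"k({token})", f"v({token})")


def generate_without_cache(tokens: List[str], counter: ProjectionCounter) -> None:
    for step in range(1, len(tokens) + 1):
        # Recompute keys/values for the whole prefix at every step;
        # attention would read prefix_kv here.
        prefix_kv = [counter.project(t) for t in tokens[:step]]
        assert len(prefix_kv) == step


def generate_with_cache(tokens: List[str], counter: ProjectionCounter) -> None:
    cache: List[Tuple[str, str]] = []
    for token in tokens:
        # Only the newest token is projected; earlier entries are reused.
        cache.append(counter.project(token))


if __name__ == "__main__":
    tokens = [f"t{i}" for i in range(100)]
    no_cache, cached = ProjectionCounter(), ProjectionCounter()
    generate_without_cache(tokens, no_cache)
    generate_with_cache(tokens, cached)
    print(f"without cache: {no_cache.calls} projections")  # 5050
    print(f"with cache:    {cached.calls} projections")    # 100
```

For n tokens the uncached loop performs n(n+1)/2 projections against n with a cache. Compressing the cache then shrinks the memory each stored entry occupies, which is the second saving the paragraph mentions.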

Energy experts are cautiously optimistic about DeepSeek’s innovations. “It just shows that AI doesn’t have to be an energy hog,” says Madalsa Singh, a postdoctoral research fellow at the University of California, Santa Barbara, who sees more sustainable AI as a viable alternative. Uncertainty remains, however. Carlos Torres Diaz, head of power research at Rystad Energy, points out that there is still little concrete, independently verifiable data on how much energy DeepSeek actually uses. Philip Krein of the University of Illinois Urbana-Champaign adds a further caution: greater efficiency could paradoxically spur a surge in AI deployment, a phenomenon known as Jevons’ paradox, in which efficiency gains lead to greater overall consumption.
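Krein’s concern can be stated as simple arithmetic. Treat total energy $E$ as energy per query $e$ times the number of queries $Q$. With illustrative numbers that are our own assumption, not figures from any study, a tenfold efficiency gain combined with fifteenfold growth in usage still raises total consumption:

$$
E = e \cdot Q, \qquad E' = \frac{e}{10} \cdot 15\,Q = 1.5\,E
$$

In general, total energy rises whenever usage grows by a larger factor than efficiency improves.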